Data Integrity: towards the introduction of Data Management

The emergence of new texts on data integrity, and their interpretation through audits and inspections, does not in any way eclipse pre-existing regulatory elements, particularly Annex 11 of the EU-GMP.

As indicated, it is not strictly speaking a new regulation, but rather “… a new approach to data management and control …”. This new orientation highlights aspects already mentioned in existing texts and reminds us that practices have been subject to lapses, either as a result of poor application of regulations or sometimes through the deliberate commission of criminal offences.

While the scope of data integrity is supposed to extend to all data, of whatever nature, here we will deal in particular with data in their electronic form, especially data understood and manipulated by information systems.

The pioneering character of the texts on data integrity therefore lies in awareness building and in a new way of envisaging corporate responsibility. We will show how the concepts sometimes repeat points already widely mentioned in pre-existing texts, particularly Annex 11 of the EU-GMP (5), and how certain positions provide very specific clarification. We will also examine the impact of implementing Data Integrity on the existing quality system and the changes that need to be considered in response.

Above all, we will distinguish what represents genuinely new clarification from issues that have already been widely debated.

1. The major principles of Data Integrity

The general concept is now beginning to be well known, particularly through the acronym ALCOA (2 – § III 1. A) or its extended version (see ALCOA+). The detailed, commented explanation of each of these letters is sufficiently documented, so we will concentrate instead on identifying the new concepts.

1.1 Data, Meta-Data, Trail, Static-Dynamic

Through data integrity, we can see that the way data is viewed is becoming more comprehensive. A data item is not single and self-contained: every signified value depends on other signifiers. A weight, for example, is only a figure, which has no value unless it is coupled to the metadata that signify its unit. The concept of data is changing: a data item is no longer only the signified, but everything of which it is composed. A data item may also change according to circumstances, and the history of these changes, with the various successive states, is indissociable from it. It is therefore necessary to revise our concept of data and to consider that a data item comprises the signified, the metadata which constitute the signifiers, and the associated histories and audit trail. The whole is indissociable and becomes the data item.

Depending on the nature of the data, some remain unalterable on their medium after their creation (static data, for example “paper” data), while others can interact with the user (dynamic data, such as electronic data), who may reprocess them with a view to their better exploitation.

These three concepts, whose indissoluble association represents a new vision, are clearly highlighted in the texts, whether the FDA document (2 – III – 1 – b, c and d), the PIC/S guide (3 – 7.5, 8.11.2) or the MHRA document (4 – 6.3, 6.7, 6.13), which describes more clearly a document in paper format as static data and a record in electronic format as dynamic data.

This meshing (see Fig. 1) defines a new unit, which then becomes The Data.
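The "expanded" data item described in this section can be pictured as a small data structure. The following is only a minimal sketch in Python, not a prescribed model: the class names `DataItem` and `AuditEntry`, the method `amend`, and the sample values (`BAL-01`, `jdoe`) are hypothetical and do not come from the regulatory texts.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    """One recorded change: who, when, old value, new value and why (hypothetical layout)."""
    user: str
    timestamp: datetime
    old_value: float
    new_value: float
    reason: str


@dataclass
class DataItem:
    """The signified value, its metadata (signifiers) and its audit trail, kept together."""
    value: float
    metadata: dict  # e.g. {"unit": "mg", "instrument": "BAL-01"}
    audit_trail: list = field(default_factory=list)

    def amend(self, new_value: float, user: str, reason: str) -> None:
        """Change the value while preserving the previous state in the audit trail."""
        if not reason:
            raise ValueError("a reason is required for every change")
        entry = AuditEntry(user, datetime.now(timezone.utc), self.value, new_value, reason)
        self.audit_trail.append(entry)
        self.value = new_value


# A weight is meaningless without its unit, and its history travels with it.
weight = DataItem(value=12.5, metadata={"unit": "mg", "instrument": "BAL-01"})
weight.amend(12.7, user="jdoe", reason="transcription error corrected")
```

In this sketch the value can only be changed through `amend`, so every successive state remains recoverable from the trail; a real system would also have to protect the trail itself against modification.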

1.2 Governance and behavior

Another major point which rapidly appears on reading the texts is the involvement desired at the highest level of the organization (“Highest levels or organisation” 4 – § 3.3, or “Senior Management should be accountable” 4 – § 6.5) in assuming responsibility for data integrity. This task is expected to be the responsibility of management, with training cycles and procedures implemented to this effect. A very particular focus is directed at the behavior of staff and managers in the management of data integrity. The PIC/S guide even establishes a form of categorization according to the company's attitude to employee behavior with regard to data integrity problems. A company may display an “Open” culture, in which all co-workers, whatever their level, can challenge the chain of command in the interest of better data protection, or a “Closed” culture, in which it is culturally more difficult to question management or to report anomalies. The aim is to ensure that, within an “Open” company, co-workers are able to resist any pressure from the chain of command that would be contrary to the requirements of data integrity.

It is understood that the authors of these documents expect organizations to initiate action plans at the highest level for a real data integrity management program: an established company policy (drafted in the form of a Policy or a Procedure at the highest level), designated management sponsors, an actual implementation project, training for all co-workers to raise their awareness and involvement, and periodic reviews with possible corrective and preventive actions (CAPA).

1.3 Media for data

The unifying concept of metadata logically also involves that of the medium: media (blank forms, 2 § 6) must also be controlled. Insofar as the medium also determines a part of the signifier (that is, the qualifying information), it is also subject to a controlled cycle. Although this idea is most naturally interpreted as meaning a paper medium, it is also applicable to the electronic format (management of data entry forms and of Excel-type calculation templates, which are often present in laboratories). PIC/S considers this point mainly from the aspect of paper forms (3 § 8 and following). Note that very often these forms exist in electronic format for the purposes of manual or electronic completion.

1.4 Data Life Cycle

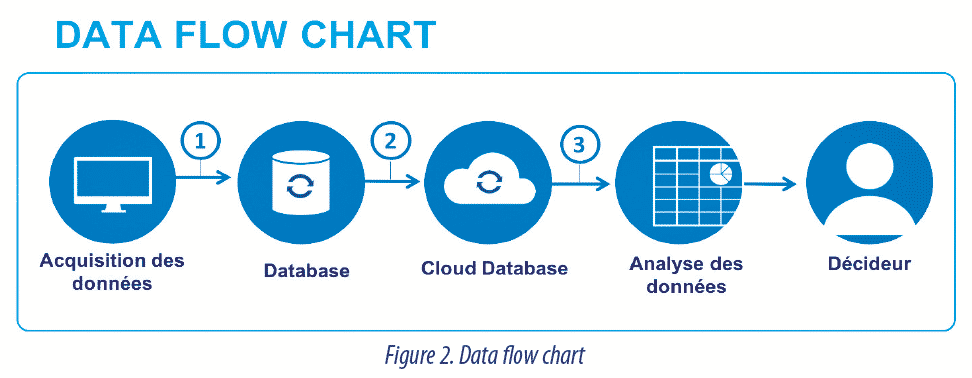

The appearance of the Data Life Cycle concept should also be noted. This aims to account for the entire life of the data item, from its generation through its control, storage, any transformations and use to its archiving (4 § 6.6 or 3 § 13.7). This idea of a data life cycle is to be contrasted with the relatively old idea of an application life cycle, and it is here that the innovative nature of the concept of data integrity is seen. A data item, especially on a computer, exists by itself and not only through the application or applications which generated it and which manipulate it. The data item, which was previously studied and considered only through the application that had to be validated, is thus breaking away from “systems” completely to become Information. The spread of computing in companies increasingly encourages data transfers. Data generated by production or monitoring equipment can very well pass through several applications and finally contribute to a decision relating to patient safety or product quality (see example Fig. 2).

The data life cycle concept lets us understand that it is necessary to ensure that the meaning of the data item used to take a decision on patient health and product quality has not been distorted relative to the initial value generated by the equipment of origin. It is therefore essential to be able to guarantee and demonstrate that successive transformations of the data item have not corrupted the value and that the original data (source data or Raw Data) can always be accessed if necessary.

1.5 Migration of data, data transfer, interfaces

A data item, in its broadest sense (see the concept of metadata, data and audit trail above), may be transferred (it passes from one computerized solution to another), moved (it is reinstalled in a new computerized system by means of what is called data transfer), and may even be regenerated if its storage structure becomes obsolete or cannot be preserved. Such an operation must of course be subject to an appropriate procedure to verify and document that data quality is retained. Data transfers are understood by PIC/S as an element of the data life cycle (3 § 5.12).

For the MHRA (4 § 6.8), this is a full-blown procedure which must include a “rationale” and a robust validation aimed at demonstrating data integrity. Interestingly, the MHRA text emphasizes the risks linked to this type of operation and mentions common errors in this field.
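One possible way to document that a transfer has not altered the data is to compare a fingerprint of each record before and after the operation. The sketch below is an illustration only, under the assumption that records can be exported from both systems as dictionaries keyed by a record identifier; the function names `fingerprint` and `verify_migration` and the sample records are hypothetical, and the guidance documents do not prescribe this technique.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Return a SHA-256 digest of a record serialized in a canonical form."""
    # Sorting keys makes the digest independent of field order in the export.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify_migration(source: dict, target: dict) -> dict:
    """Compare source and target record by record.

    Both arguments map a record identifier to the record content
    (value plus metadata). The result lists what was lost, added or altered.
    """
    missing = sorted(key for key in source if key not in target)
    unexpected = sorted(key for key in target if key not in source)
    altered = sorted(
        key
        for key in source
        if key in target and fingerprint(source[key]) != fingerprint(target[key])
    )
    return {"missing": missing, "unexpected": unexpected, "altered": altered}


# Hypothetical example: R2 lost its original unit during the transfer.
source = {"R1": {"value": 12.5, "unit": "mg"}, "R2": {"value": 7.0, "unit": "mg"}}
target = {"R1": {"unit": "mg", "value": 12.5}, "R2": {"value": 7.0, "unit": "g"}}
report = verify_migration(source, target)
```

Here `report["altered"]` contains `"R2"`, because the metadata changed even though the figure did not. A comparison of this kind supports, but does not replace, the documented rationale and validation that the MHRA text asks for.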

1.6 Existing concepts

Moreover, we find concepts already widely mentioned in European and/or American texts, though this does not reduce their importance.

- The electronic signature (2 § III – 11; 3 § 9.3; 4 § 6.14; 5 § 14) and the audit trail (2 § III – 1 c; 3 § 9.4; 4 § 6.13; 5 § 9), for all modifications of and actions on data.

- The various forms of security involving access to data and systems (see below for more detail on security) (2 § III 1.e and 1.f; 3 § 9.3; 4 § 6.16, 6.17, 6.18; 5 § 12 and following).

- Mapping/inventory with risk analyses to identify and control systems and their data (3 § 9.21; 4 § 3.4; 5 § 4.3), data flows, timely transitions and changes of an application, and at-risk sectors, with an assessment of the nature and of the risk.

- Periodic reviews and management of incidents with corrective and preventive actions if necessary (3 § 9.2.1 – 4; 4 § 6.15; 5 § 11, 13).

- The temporality of data and the immediacy of creations (event-data relationship) (3 § 8.4; 4 § 5.1).

2. Major principles for the setting up of data integrity in the quality system

It can therefore be seen that, beyond already existing and widely documented elements in texts such as the EU-GMP (see above), new concepts are emerging, which need to be given a little more attention and to be considered particular areas for vigilance for the purposes of establishing a real data integrity management policy in a company.

2.1 Policy

The first point that attracts attention and which proves to be a true innovation is the inclusion of the problem of data integrity at the highest level of the organization. It is necessary to have a policy or a general policy procedure which will incorporate at least the following points:

- The definition of Data Integrity as it is understood in the company, and its scope. The scope should not omit data that are not stored or managed electronically, or that are stored in hybrid form. It is not a question here of rewriting the regulatory texts but rather of delivering an internal interpretation intended for all staff.

- The human resources put in place in terms of organization to manage Data Integrity. For example, is there a Data Management Committee, or a General Data Manager? This covers the organizational and human principles of data integrity control and the notions of individual responsibility for data preservation, with the aim of moving towards an open organization in line with the PIC/S recommendations.

- The establishment of control solutions such as mapping principles, general conditions of risk assessment and appropriate measures to ensure maintenance of the integrity of these data. This involves defining the conditions according to which the data will be exploited in the organization, how they are mapped, how a risk analysis is carried out during their life cycle and the measures taken to ensure their continuity.

- Security must be addressed in the most wide-ranging manner possible, whether physical security, logical security, or continuity (or even other, more extended concepts). With regard to logical security, it is also important to raise awareness of the electronic signature and to present the means of ensuring that all players attach the same importance to electronic and manual signatures, as required by the texts. In other words, the policy should stipulate that staff in a position to use an electronic signature have been informed of this and are aware that it is equivalent to a manual signature.

- Organizational resources to perform control reviews, incident tracking and the handling of any corrective and preventive actions.

- The conditions associated with modifications, changes and data transfer must be well defined. They must provide the means necessary to ensure that these operations are indeed performed within the framework of a rationale, and must ensure in a robust fashion that transfers or modifications of data have been subject to a satisfactory validation process and do not corrupt the initial semantic value.

- The specific constraints associated with the introduction of computerized solutions, in particular the requirements to be complied with during the setting up of new solutions, with the aim of being able to guarantee the maintenance of data integrity.

This policy must be approved at the highest level and receive a broad consensus in the organization. It must be circulated and be the subject of general staff training. This training must be tracked.

2.2 Mapping/ Analysis of risks

Mapping is a requirement that has been present for a long time in the EU-GMP. The two documents (mapping and risk analysis) may be implemented together. It is however interesting to handle the mapping separately, with the aim of developing it in real time and not as and when the solution is put in place. Mapping, which in the past tended to present a systems-oriented view, must now include the data in the form of a Data Flow Chart.

An example of a Data Flow Chart is presented in Fig. 3.

A Data Flow Chart must allow identification of:

- The elements that contribute to data generation.

- The resources used to store and protect source data and the resources that permit their restoration, in a rapid and comprehensible manner.

- The places of data transfer and company procedures to ensure that this does not corrupt the meaning of the data.

- Actions put in place to control or mitigate risks, for areas identified as being at risk.

- The complete life cycle from generation to archiving or, where applicable, deletion.

The risk analysis must also identify the frequency of reviews, according to the sensitivity of the data involved.

2.3 Security

The principles of security (5 § 12) must be subject to ad hoc procedures and are based on three major components.

- Physical security.

This involves a set of resources and measures which ensure that data cannot be corrupted by physical means. Although large data systems are generally well protected, corruptions or risks can often appear through the intermediary of physically isolated solutions (e.g.: a stand-alone solution which controls an item of equipment where there is easy access to the machine), network peripherals (network technical cabinets, for example), even physical storage items. In theory, this includes problems associated with the quality of the electric current, fire and flood risks, risks associated with animals, temperature, physical access, etc. In the context of data integrity, physical protection of data in paper form should also be taken into account, and access to these data protected.

- Logical security.

This involves all measures which ensure that systems are accessed only by duly authorized persons, with strict recording and complete traceability of access. Access to the systems and/or data cannot be anonymous and is necessarily personalized and authorized. Authorization is given on the basis of a documented profile, following tracked training which ensures that the players understand the importance of their actions. Those operating as administrators of systems or data must be trained and the training must be tracked. In this respect, it is interesting to note the position of the PIC/S document (3 § 9.3) on administrators. It emphasizes the sensitivity of this role and asks that the administrator be clearly identified as a person who is not operationally involved in the use of the system concerned. As good practice, an administrator must have a dedicated administration account for administrative tasks, which is not confused with their other tasks, and logins as administrator must be tracked and allow the person who logged in to be identified by name. Information relating to logical security, especially the identification of persons holding sensitive rights with respect to data integrity (modification of status, for example), must be available to any auditor or inspector in a rapid and legible manner.

- Continuity.

This involves all measures which allow the planning of preventive or corrective operations, to be performed in the event of an accident or other events, which ensure that regardless of the events that arise there will be no loss of data. Among these operations are found conditions for backup operations, and restoration operations which can also corrupt data irreversibly. The measures are incorporated in activity continuity plans especially the DRP (Disaster Recovery Plan – further to an accident/contingency plan). This very broad subject area is a company matter, which ensures data security as a last resort.
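The logical security expectations described above (named accounts, administrators who are not operational users, rapid identification of holders of sensitive rights) lend themselves to a simple periodic check. The following is a minimal sketch, assuming an account list can be exported from the system concerned; the `Account` structure, the role names (`admin`, `operator`, `modify_status`) and the sample accounts are hypothetical and would need to be mapped to the real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    login: str
    owner: str          # named person; empty string for a shared or generic account
    roles: frozenset


# Assumption: these role names stand for rights that are sensitive for data integrity.
SENSITIVE_ROLES = frozenset({"admin", "modify_status"})


def review_accounts(accounts):
    """Return (login, finding) pairs for accounts that contradict the stated practices."""
    findings = []
    for account in accounts:
        if not account.owner:
            findings.append((account.login, "no named owner"))
        if "admin" in account.roles and "operator" in account.roles:
            findings.append((account.login, "administrator is also an operational user"))
    return findings


def sensitive_holders(accounts):
    """List the logins holding at least one sensitive right, for auditors or inspectors."""
    return sorted(a.login for a in accounts if a.roles & SENSITIVE_ROLES)


accounts = [
    Account("adm-kbrown", "K. Brown", frozenset({"admin"})),
    Account("jdoe", "J. Doe", frozenset({"operator"})),
    Account("labshared", "", frozenset({"operator"})),
    Account("msmith", "M. Smith", frozenset({"admin", "operator"})),
    Account("qa-lee", "A. Lee", frozenset({"modify_status"})),
]
findings = review_accounts(accounts)
holders = sensitive_holders(accounts)
```

With these sample accounts, `findings` flags the shared account `labshared` and the mixed-role account `msmith`, while `holders` gives the list an inspector could be shown on request. Such a check only reports on the account configuration; it does not replace the tracking of administrator logins in the system itself.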

2.4 Behavior

We have seen the importance attached in different texts (especially the PIC/S and the MHRA document) to governance at all levels, particularly to behavior in data integrity management. The enormous difficulty inherent in anything that affects behavior can be imagined. The PIC/S emphasizes this issue and dedicates an entire section to it (§ 6). Raising staff awareness, and protecting staff against “pressures” which could encourage them to falsify data, are areas that must be controlled. The PIC/S declares “… An understanding of how behaviour influences the incentive to amend, delete or falsify Data and the effectiveness of procedural controls designed to ensure Data integrity…”.

It is therefore important to:

- Consider the setting up of a Data Integrity system within an organization as a program sponsored directly at the highest levels of responsibility within the company.

- Organize actions so that employees are active participants in data integrity.

- Set up appropriate training activities to train them in the importance and fragility of data as well as in key concepts, such as the electronic signature, the traceability of operations on data, audit trail reviews.

- Create the means for review, control and correction, so that the system can be readjusted.

Inspectors and auditors clearly expect that this commitment is the expression of a corporate culture which ensures that fraudulent actions to corrupt data cannot occur.

Conclusion

Data integrity is an important process which demands extensive readjustment of document and quality systems.

Issues relating to the validation of computerized systems have been very widely debated over the past twenty years. It is true that in the expression information system, the balance has leaned much more towards system than information. This is the first great virtue of this approach, that of having repositioned data at the center of the debate, while emphasizing their importance as well as their fragility and threats.

It is important to understand that this subject takes on a very particular importance in the light of current events, particularly the criminal offenses which have been perpetrated. Even if, in the vast majority of cases, the risks to data do not arise from such causes, the issue is particularly sensitive.

The key to the control of Data Integrity seems therefore to rest on three major lynchpins:

- Behavior and governance: a company affair at all levels, which reflects a quality culture.

- Control of data within company areas through Data Flow Charts and data risk analyses incorporating the “expanded” view of data, that is signified, Metadata and Audit Trail.

- Observance of regulations at all levels, especially those which involve security in all its aspects and validation.
