Method analytical performance strategy in commercial quality control laboratories

Method analytical performance is a recurring topic: throughout the analytical method life cycle, commercial quality control laboratories must generate accurate and precise product information. It is also at the core of several guidance documents, either already published or in draft [1][2]. This article addresses the challenges of developing sustainable control strategies for methods in commercial quality control laboratories.

1. What is consistent method performance?

When an analytical method is introduced into a quality control laboratory, the available data, yielded by on-site validation or by a method transfer exercise [3], demonstrate that the method has been installed with appropriate performance to support its intended analytical purpose. For new products to be submitted, analytical methods are often transferred into quality control laboratories several months before routine operations and commercialization begin. The first challenge for continued performance is therefore to deliver consistent performance over time, despite potentially infrequent use while the product is awaiting approval.

Once in routine operations, the analytical method will reveal areas for optimization or technique-based improvement, and these improvements may require supplemental validation and/or a regulatory variation.

There are two categories of method changes: proactive optimizations and continued method robustness evaluations. While the first category requires data to evaluate whether method performance remains appropriate after the change is introduced, the second also requires data to demonstrate comparability. Changes in column stationary phases, laboratory consumables, protective filters or vendors, or the introduction of different bench practices or techniques, may impact method performance unpredictably and may require method revalidation.

Continued performance therefore requires routine evaluation of method performance in a way that confirms continued control and understanding of the impact of both intentional and unintentional changes. One way to demonstrate method sustainability is to define, for each method, the most appropriate frequency for a "zoom-in" (e.g. annually, quarterly, or every fifth gel column change) to capture continued method performance indicators or trends.
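As an illustration of how such a per-method frequency could be encoded, the sketch below combines a calendar trigger with an event trigger (a count of column changes since the last review). The `ZoomInPolicy` class, its fields and the `zoom_in_due` function are hypothetical names chosen for this example, not part of any established system, and the thresholds in the usage lines are placeholders that each laboratory would set from its own risk assessment.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ZoomInPolicy:
    """Hypothetical per-method trigger for a continued-performance "zoom-in"."""
    max_days: Optional[int] = None            # calendar trigger, e.g. 91 (quarterly) or 365 (annual)
    max_column_changes: Optional[int] = None  # event trigger, e.g. every fifth column change


def zoom_in_due(policy: ZoomInPolicy, last_review: date, today: date,
                column_changes_since_review: int) -> bool:
    """Return True as soon as either configured trigger has been reached."""
    if policy.max_days is not None and (today - last_review).days >= policy.max_days:
        return True
    if (policy.max_column_changes is not None
            and column_changes_since_review >= policy.max_column_changes):
        return True
    return False


# Placeholder policies: one purely calendar-based, one combining both triggers.
quarterly = ZoomInPolicy(max_days=91)
sec_method = ZoomInPolicy(max_days=365, max_column_changes=5)
print(zoom_in_due(quarterly, date(2020, 1, 1), date(2020, 4, 15), 0))  # True: 105 days elapsed
print(zoom_in_due(sec_method, date(2020, 1, 1), date(2020, 6, 1), 2))  # False: neither trigger reached
```

Keeping both triggers optional lets a purely time-based method and a consumable-driven method share the same check.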

2. What tools are available to gain more insight into method performance?

By design, method performance is assessed from method information gathered over time and compared against a consistently tested material. There are several ways to achieve this objective and to evaluate multiple method indicators and trends. Quality control laboratories should therefore develop a strategy for continued analytical performance evaluation based on a comprehensive risk assessment:

- To select what makes sense and deserves to be routinely evaluated.

- To avoid wasting analysis time on non-pertinent data.

First, a comprehensive risk assessment should be performed for each method. The first stage of this risk assessment may consist of collecting information on the following items to identify candidate methods requiring further insight:

- The capability level (e.g. Cpk) of the tested Critical Quality Attribute (CQA), i.e. how tightly the process/method is expected to perform within the specification.

- The method system suitability record and any documented failures.

- Any method-specific vulnerability or technical focus point, such as preparation complexity or key milestones.
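This first screening stage could be sketched as follows, assuming CQA capability is expressed as the usual Cpk index, min(USL - mean, mean - LSL) / (3s), computed from routine results. The 1.33 Cpk threshold, the zero-tolerance rule for system suitability failures and the function names are illustrative assumptions; the real criteria belong in the laboratory's own risk assessment procedure.

```python
import statistics


def cpk(results, lsl, usl):
    """Capability index Cpk = min(USL - mean, mean - LSL) / (3 * s), s = sample standard deviation."""
    mean = statistics.mean(results)
    s = statistics.stdev(results)
    return min(usl - mean, mean - lsl) / (3 * s)


def needs_further_insight(cpk_value, sst_failures, specific_vulnerability,
                          cpk_threshold=1.33, max_sst_failures=0):
    """Flag a method as a candidate when any of the three screening items raises concern.

    The default thresholds are illustrative assumptions, not regulatory requirements.
    """
    return (cpk_value < cpk_threshold
            or sst_failures > max_sst_failures
            or specific_vulnerability)


# Made-up batch results for a CQA with a 95-105 specification.
value = cpk([98, 99, 100, 101, 102], lsl=95, usl=105)
print(round(value, 2))                         # 1.05
print(needs_further_insight(value, 0, False))  # True: capability below the assumed 1.33
```

Note that a Cpk computed from routine batch results reflects combined process and method variability, which is why it is only a screening signal here.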

Second, continued performance may be further assessed using permanent or periodic follow-up materials; the most commonly used approaches are detailed below. Together they form a toolkit [3] from which to choose the most appropriate approach for the candidate methods identified in the first step of the risk assessment:

A. Control charting the analytical attribute: system suitability samples or control standards are included in the method design to measure system suitability parameters (e.g. resolution, peak asymmetry).

They can also be used to trend their own quality attributes, since they can be considered permanent samples if they have, or closely approach, the same formulation as the test samples.
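Approach A is commonly implemented as an individuals (I-MR) control chart. The sketch below is one minimal way to do it, assuming the limits are estimated from a stable baseline period using the average moving range (2.66 = 3/d2, with d2 = 1.128) and that only two signals are checked: a point beyond the limits, and a run of eight consecutive points on one side of the centre line (a Western Electric-style rule). The function names and the run length are assumptions; a laboratory's own trending procedure may define other rules.

```python
import statistics


def imr_limits(baseline):
    """Centre line and 3-sigma limits of an individuals chart, estimated from a baseline series."""
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    mr_bar = statistics.mean(moving_ranges)
    centre = statistics.mean(baseline)
    # 2.66 = 3 / d2, where d2 = 1.128 for moving ranges of two consecutive points
    return centre - 2.66 * mr_bar, centre, centre + 2.66 * mr_bar


def flag_signals(values, lcl, centre, ucl, run_length=8):
    """Return (indices beyond the limits, indices completing a run of `run_length` on one side)."""
    beyond = [i for i, v in enumerate(values) if v < lcl or v > ucl]
    runs = []
    streak, side = 0, 0
    for i, v in enumerate(values):
        s = (v > centre) - (v < centre)  # +1 above, -1 below, 0 exactly on the centre line
        if s != 0 and s == side:
            streak += 1
        else:
            # a new side starts a fresh streak; a point on the centre line resets it
            streak, side = (1, s) if s != 0 else (0, 0)
        if streak >= run_length:
            runs.append(i)
    return beyond, runs
```

In use, a baseline series of, for example, system suitability resolution values would be passed once to `imr_limits`, and each new sequence result would then be checked with `flag_signals` against those fixed limits rather than against limits recomputed from the monitored data.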

B. Control charting the analytical attribute(s) of an additional control sample in each analytical sequence: this provides the same level of detailed information as the previous approach, but requires the additional effort of material identification, inventory, testing, and quality assurance handling of the sample's use and of overall GMP data acceptance. However, caution is needed, as control samples may degrade over time. This additional challenge may be overcome by charting the quality attribute and checking the consistency of its ageing profile.

Note: a control sample must never be used on its own to define data acceptability.
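The ageing-profile check of approach B can be sketched by fitting a straight line to the control sample results as a function of sample age, then testing each new result against that profile. This assumes degradation is approximately linear over the period of use and, for simplicity, compares new results with k residual standard deviations instead of a full regression prediction interval (which would be somewhat wider). The names and the k = 3 default are illustrative assumptions, and such a check only trends the control sample; it does not decide data acceptability.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class AgeingProfile:
    intercept: float
    slope: float      # change in the attribute per unit of age (e.g. per month)
    resid_sd: float   # residual standard deviation, n - 2 degrees of freedom


def fit_ageing_profile(ages, values):
    """Ordinary least-squares straight line through (age, value) pairs."""
    n = len(ages)
    if n < 3:
        raise ValueError("at least 3 points are needed to estimate a residual SD")
    mean_t, mean_v = statistics.mean(ages), statistics.mean(values)
    sxx = sum((t - mean_t) ** 2 for t in ages)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(ages, values))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_t
    sse = sum((v - (intercept + slope * t)) ** 2 for t, v in zip(ages, values))
    return AgeingProfile(intercept, slope, math.sqrt(sse / (n - 2)))


def consistent_with_profile(profile, age, value, k=3.0):
    """True if a new result lies within k residual SDs of the fitted ageing line."""
    expected = profile.intercept + profile.slope * age
    return abs(value - expected) <= k * profile.resid_sd
```

A result that falls outside the band points to a change in either the method or the control sample's degradation behaviour, which then needs investigation rather than automatic rejection.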

C. Recording the precision/accuracy of a control sample over a period of time: a control sample may be inserted over a defined time range, or until enough runs have been completed to cover a minimum number of analysts, columns or equipment setups. This assessment can be repeated periodically, comparing precision data obtained quarterly or annually to determine whether performance remains stable over time. Knowledge of the control sample product, combined with an appropriate choice of time period, is key to gathering accurate information.
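A minimal sketch of approach C follows, assuming each control sample result is tagged with a period label (e.g. a quarter) and that precision is summarised as %RSD per period. Flagging a period whose %RSD exceeds a baseline period by more than a fixed factor (1.5 here) is an illustrative heuristic, not a statistical test; a formal comparison, such as an F-test or a variance-component analysis across analysts and columns, may be preferable. The function names and the data are made up for the example.

```python
import statistics
from collections import defaultdict


def precision_by_period(results):
    """Group (period, value) pairs and return {period: (n, mean, %RSD)}."""
    groups = defaultdict(list)
    for period, value in results:
        groups[period].append(value)
    summary = {}
    for period, values in groups.items():
        if len(values) < 2:
            continue  # a standard deviation needs at least two results
        mean = statistics.mean(values)
        summary[period] = (len(values), mean, 100 * statistics.stdev(values) / mean)
    return summary


def periods_exceeding_baseline(summary, baseline_period, max_ratio=1.5):
    """Periods whose %RSD exceeds the baseline %RSD by more than `max_ratio` (illustrative factor)."""
    baseline_rsd = summary[baseline_period][2]
    return [p for p, (_, _, rsd) in summary.items()
            if p != baseline_period and rsd > max_ratio * baseline_rsd]


# Made-up control sample results for two quarters.
data = [("2020-Q1", 100), ("2020-Q1", 102), ("2020-Q1", 98),
        ("2020-Q2", 100), ("2020-Q2", 104), ("2020-Q2", 96)]
summary = precision_by_period(data)
print(periods_exceeding_baseline(summary, "2020-Q1"))  # ['2020-Q2']
```

In practice each period would contain far more than three results, spread over the analysts, columns and equipment setups mentioned above.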

D. Control charting the difference between orthogonal or otherwise related analytical attributes by production batch: for example, an in-process test result versus the release test result obtained after filling, or the outcome of a protein suspension assay versus the average of the suspension content uniformity results.

This approach provides information on whether the analytical method has the same performance across different tests or laboratories.

It does not follow a single material over time but provides a high-level assessment of method performance by examining manufactured materials at different stages or through different attributes.
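Approach D can be sketched as computing one difference per batch and charting it. The example assumes paired in-process and release results per batch, takes "release minus in-process" as the sign convention, and sets limits at the mean ± 3 SD of a set of baseline batches; all three are assumptions made for illustration, and an individuals chart built on moving ranges could be used instead. Function names and data are hypothetical.

```python
import statistics


def batch_differences(pairs):
    """pairs: {batch_id: (in_process_result, release_result)} -> {batch_id: release - in_process}."""
    return {batch: release - ipc for batch, (ipc, release) in pairs.items()}


def out_of_limit_batches(diffs, baseline_batches, k=3.0):
    """Batches whose difference lies more than k SDs from the baseline mean difference."""
    base = [diffs[b] for b in baseline_batches]
    centre, sd = statistics.mean(base), statistics.stdev(base)
    return [b for b, d in diffs.items() if abs(d - centre) > k * sd]


# Made-up paired results for five batches.
pairs = {"B1": (100.0, 100.5), "B2": (99.0, 99.3), "B3": (101.0, 101.6),
         "B4": (100.0, 100.4), "B5": (100.0, 103.0)}
diffs = batch_differences(pairs)
print(out_of_limit_batches(diffs, ["B1", "B2", "B3", "B4"]))  # ['B5']
```

A stable mean difference, whether near zero or at a known and justified offset, indicates that both methods keep behaving consistently relative to each other; a shifted difference does not say which method moved, so it triggers a joint investigation.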

E. Indirect control charting of analytical separation consistency (e.g. the front and back of the main peak in SEC-HPLC or capillary electrophoresis of monoclonal antibodies), or control charting the consistency of selected points of the four-parameter sigmoid curves typically used in bioassays. This may be an option for selecting what matters most to prove continued method performance, but it requires a bridging technical study or rationale linking the monitored method element to overall method performance for the reported quality attributes.
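For the bioassay case of approach E, the sketch below derives a few per-run values from a four-parameter logistic (4PL) model, y = d + (a - d) / (1 + (x/c)^b), which could then be control charted. It assumes the 4PL parameters of each run are already produced by the bioassay fitting software. The choice of both asymptotes, the response span and the EC20/EC50/EC80 doses as the "selected points" is an assumption; which points to chart should come from the bridging study mentioned above. The dose reaching a fraction f of the way from a to d follows from rearranging the model: x = c (f / (1 - f))^(1/b).

```python
def four_pl(x, a, b, c, d):
    """4PL response for dose x > 0: a = response at zero dose, d = at infinite dose, c = EC50, b = slope."""
    return d + (a - d) / (1 + (x / c) ** b)


def dose_at_fraction(f, b, c):
    """Dose at which the response has moved a fraction f (0 < f < 1) of the way from a to d."""
    return c * (f / (1 - f)) ** (1 / b)


def selected_points(a, b, c, d, fractions=(0.2, 0.5, 0.8)):
    """Per-run values to control chart: both asymptotes, the response span and chosen EC values."""
    points = {"zero_dose_response": a, "infinite_dose_response": d, "span": d - a}
    for f in fractions:
        points[f"EC{round(100 * f)}"] = dose_at_fraction(f, b, c)
    return points
```

Each run then contributes one value per key, and each key can be trended with the same control charting rules used elsewhere in the program.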

Because commercial quality control laboratories must tightly manage resources, priorities and testing capacity while meeting expectations to demonstrate continued method performance, it is key to be intentional in defining the continued method performance verification program:

- Intentionally identify the method properties to trend, and prioritize them via the risk assessment.

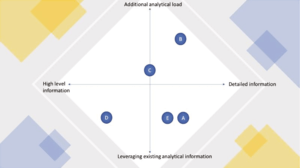

- Intentionally use the appropriate monitoring toolkit to evaluate performance. Figure 1 illustrates how each toolkit element (subsections A to E above) balances its value as a source of analytical information against the additional constraints it imposes.

- Be intentional by periodically re-evaluating the established strategy. For example, analysis of data resulting from the first risk assessment may indicate the need to switch to a different toolkit approach. This gives a quality control laboratory a periodic, ongoing and flexible way to manage its performance evaluation program. As product information evolves, the risk assessment may also change.

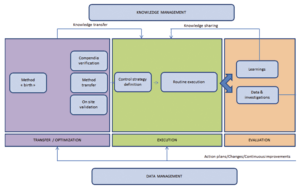

Figure 2 attempts to answer questions about the relationships between method performance verification and people, and between analysts and method performance.

Data coming from the method during its routine execution and/or continued performance verification program must be managed and result in corrective/preventive action plans and continuous improvement or method optimization.

It is also key to maintain a knowledge management program for testing analysts, because each analyzed data set brings additional learning and insight about the executed method.

Knowledge management is a general term that may cover various actions sharing a single goal: to give testing analysts an understanding of the standard operating or technique-based instructions for routine application [4].

Below are two typical examples:

- Data feedback: the collected control charts, profiles and periodic precision measurements should be presented to testing personnel to demonstrate method control over time, or to identify trends and/or shifts in the data. Control charts foster technical curiosity about operational details and bench organization, and prompt analysts to take the initiative in standardizing laboratory practices across the team.

- Wider knowledge elements: these evolve from in-depth or cross-functional investigations. They may be triggered by questions about method performance data and may be captured in method-tailored training. Training should be delivered to testing personnel to explain, for example, how a protein behaves, the chemistry behind given method activities, and/or method-specific temperature limits. By going beyond simple execution of the method, such training gives analysts an improved understanding of daily operations.

Investing in method knowledge management not only aligns with GMP expectations to promote standard method understanding across testing personnel [4], but also improves quality control laboratory efficiency and consistency. Robust method performance yields a return on investment, as it improves the speed and quality of analytical investigations and encourages further method performance improvement.

3. Conclusion

Building a continued method performance verification strategy provides a robust response to the increasing scrutiny of how quality control laboratories maintain method performance. It also drives an action plan to assess the need for method optimization and for the associated revalidation of analytical methods [5]. Such a strategy is directly aligned with improvements in laboratory efficiency. It should be streamlined, rely on a defined risk assessment approach and outline a decision tree for the appropriate follow-up. Finally, seizing the opportunity to integrate knowledge management for people into this strategy is a valuable incentive to foster scientific understanding, enhance attention to detail and promote continuous improvement, which ultimately results in enhanced method performance.


Jean-Bernard Graff, Lilly

Jean-Bernard is an expert in Analytical Science. He holds a Ph.D. from the University of Lyon (France). He has 25 years of experience in the field of Protein Analytical Sciences and Quality and now works in the domain of Biomolecule Analytical Stewardship. He joined Lilly France (Fegersheim) in 1996, where he held different assignments in the Quality Control Laboratory. In his current role, he is a Senior Global Consultant at Lilly, with oversight of bioproduct analytical methods used across different Quality Control Laboratories in the Group.

graff_jean-bernard@lilly.com

References

[1] USP, General Chapter <1220>, PF 46(5), in-process revision, Stage 3, "Continued Procedure Performance Verification"

[2] FDA, "Analytical Procedures and Methods Validation for Drugs and Biologics: Guidance for Industry", Rockville (MD), July 2015

[3] B. Pack, D. Sailer, M. Argentine, A. Barker, "Streamlining Method Transfers across Global Sites", Pharmaceutical Technology 44(9), 2020

[4] EudraLex Volume 4, Good Manufacturing Practice (GMP), Part I: Basic Requirements for Medicinal Products, Chapter 2

[5] WHO, Guideline on Validation, Appendix 4 "Analytical Method Validation", draft for comments, June 2016